The Agent Handler's OSINT Toolkit #3

OSINT Techniques & Tools for Effective HUMINT

Welcome back to The Agent Handler's OSINT Toolkit, where OSINT tools and techniques are applied to HUMINT operations.


Issue 3: Website Enumeration

This week's topic may initially seem focused on cybersecurity. However, there will be times during an investigation when you come across a website that is either owned by or linked to your subject. Website enumeration involves identifying the web pages, directories, and files associated with a particular domain. This can be extremely useful for finding hidden content, login portals, or sensitive information that might not be readily accessible through a simple Google search.

Let's dive into some of the tools you can use for website enumeration:

📒Basic - robots.txt

This file provides instructions to web crawlers (such as search engine bots and other automated tools) about which parts of the website they should or should not crawl. Because it lists paths, it can act as a partial directory of the site. By reviewing the robots.txt file, you can gain insight into which areas the site owner wants indexed and which they would prefer crawlers to stay out of. Note that the file is advisory only: a disallowed path is not necessarily access-restricted.

To review a robots.txt file, simply append /robots.txt to the domain name (e.g., https://www.example.com/robots.txt).
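On larger sites the file can run to hundreds of lines, so it can help to pull out just the listed paths. The following is a minimal Python sketch, not a full robots.txt parser: the function name parse_robots and the sample file content are hypothetical, and the sketch ignores which user agent each rule applies to.

```python
from urllib.parse import urljoin


def parse_robots(text, base_url):
    """Collect Allow/Disallow paths and Sitemap URLs from robots.txt content."""
    found = {"allow": [], "disallow": [], "sitemap": []}
    for raw_line in text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        # Skip empty rules and fields we are not interested in (e.g. User-agent).
        if not value or field not in found:
            continue
        # Sitemap entries are already full URLs; paths are joined to the base.
        entry = value if field == "sitemap" else urljoin(base_url, value)
        if entry not in found[field]:
            found[field].append(entry)
    return found


# Hypothetical robots.txt content, used here instead of a live request.
sample = """\
User-agent: *
Disallow: /admin/
Disallow: /staff-login   # internal
Allow: /press/
Sitemap: https://www.example.com/sitemap.xml
"""

results = parse_robots(sample, "https://www.example.com")
for field, entries in results.items():
    for entry in entries:
        print(f"{field}: {entry}")
```

To run this against a real file, you could download the text first (for example with urllib.request.urlopen) and pass it to the function in place of the sample string.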

The robots.txt file can be a great resource for an investigator, as it may list pages of a site that you would not otherwise be aware of. You may also find a hidden message or image, like the ones below from https://www.cloudflare.com/robots.txt.

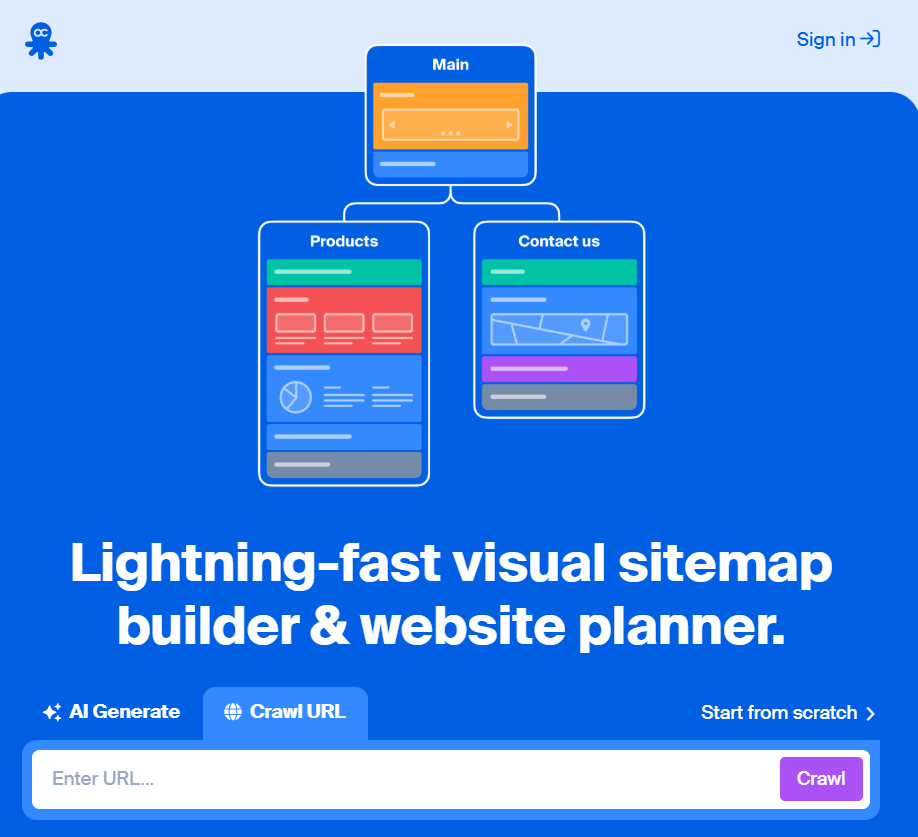

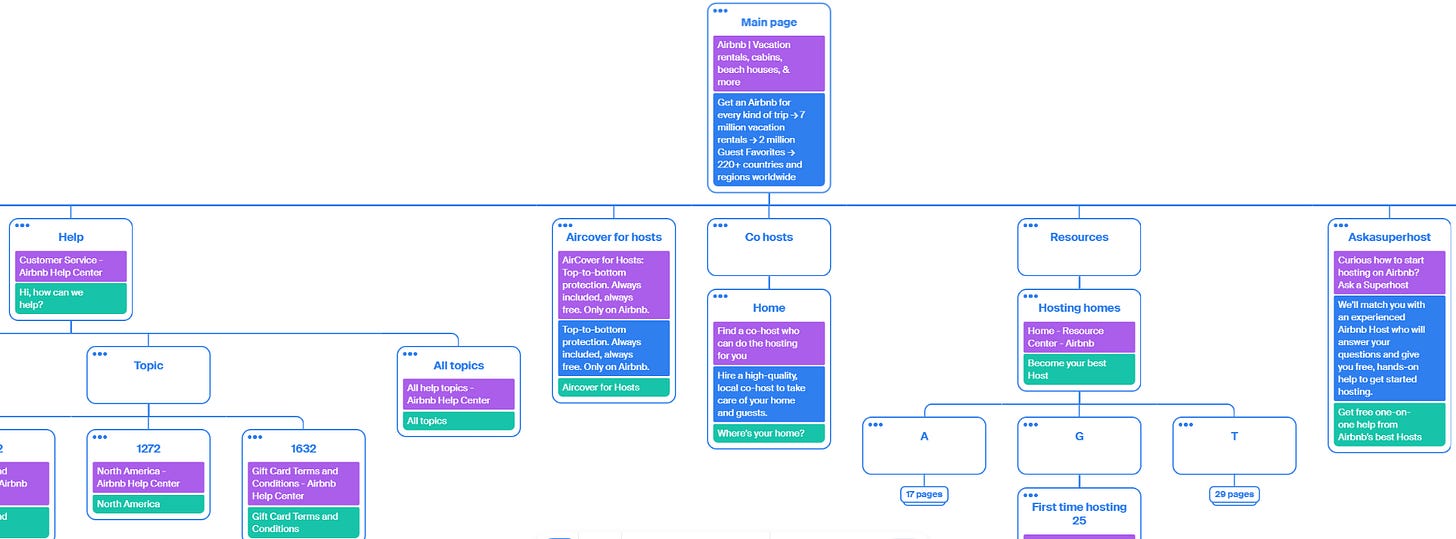

🧪Intermediate - Octopus.do

Primarily designed as a sitemap builder, this tool can be a great resource for website reconnaissance, helping investigators understand a website's structure and landscape. Octopus.do first scans and then crawls the target website, building a map of its pages.

From the resulting sitemap we can see the different pages of the site, allowing us to focus our investigation on the key pages.

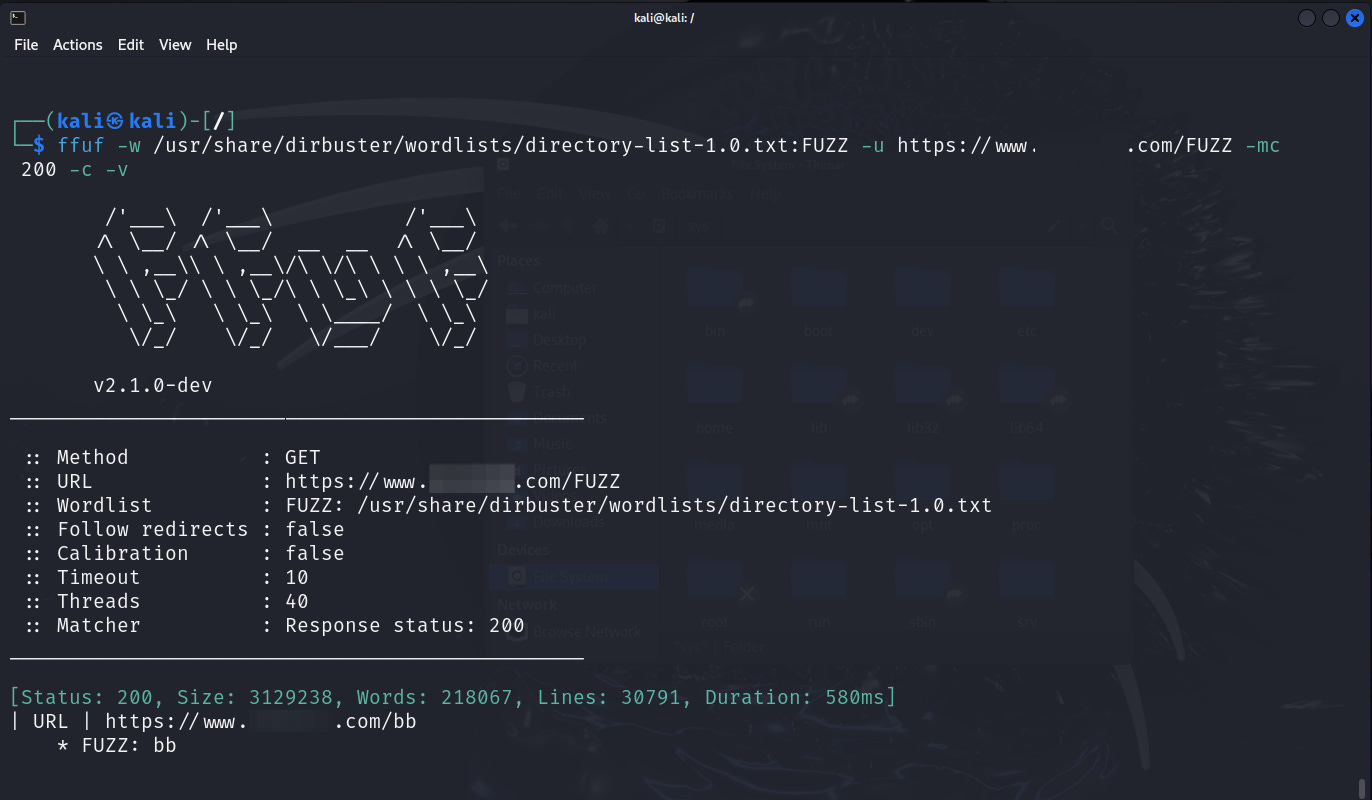
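To get a feel for what a sitemap crawl involves, here is a rough Python sketch of its core step: collecting the same-site links from a single page. This is not how Octopus.do is implemented, only an illustration of the general idea. The names LinkCollector and internal_links and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Gather href values from <a> tags in one HTML page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def internal_links(html, page_url):
    """Return the sorted, de-duplicated same-host links found on a page."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in collector.hrefs:
        # Resolve relative links and drop any #fragment part.
        absolute = urljoin(page_url, href).split("#", 1)[0]
        parsed = urlparse(absolute)
        # Keep web links on the same host; skip mailto:, other sites, etc.
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            links.add(absolute)
    return sorted(links)


# Hypothetical page content, standing in for a downloaded home page.
sample_html = """
<a href="/about">About</a>
<a href="team.html#board">Team</a>
<a href="https://www.example.com/contact">Contact</a>
<a href="https://twitter.com/example">Twitter</a>
<a href="mailto:info@example.com">Email</a>
"""

site_links = internal_links(sample_html, "https://www.example.com/")
for link in site_links:
    print(link)
```

A full crawler would repeat this step for each newly discovered link until no new pages appear, which is the part a hosted tool handles for you.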

🧠Advanced - Command-Line Tools

For advanced users, several command-line tools can automate website enumeration. One of these is ffuf, a web fuzzer that can identify various resources on a website, including directories, files, and subdomains. Here's an example of using ffuf to enumerate directories on a website:

ffuf -w /path/to/wordlist.txt:FUZZ -u https://example.com/FUZZ

In this example:

- ffuf is the command used to invoke the tool.
- -w /path/to/wordlist.txt:FUZZ specifies the word list containing potential directory names to be tested, and ties it to the FUZZ keyword.
- -u https://example.com/FUZZ defines the target URL.
- FUZZ is the placeholder indicating where each word from the word list will be substituted in turn.

Once the scan is complete, you will have a list of site directories to investigate.
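The placeholder substitution described above can be sketched in a few lines of Python. This is a simplified illustration of the idea rather than ffuf's actual implementation: the function names, the short word list, the response codes, and the set of "interesting" status codes are all assumptions made for the example, and no requests are sent.

```python
def build_candidates(url_template, words, keyword="FUZZ"):
    """Substitute each word list entry for the keyword in the URL template."""
    if keyword not in url_template:
        raise ValueError(f"{keyword} placeholder missing from URL template")
    # Blank entries are skipped here; that is a choice made for this sketch.
    return [url_template.replace(keyword, w.strip()) for w in words if w.strip()]


def filter_hits(statuses, interesting=(200, 301, 302, 401, 403)):
    """Keep URLs whose status code suggests something exists at that path."""
    return [url for url, code in statuses.items() if code in interesting]


# Hypothetical word list entries, as they might be read from a file.
wordlist = ["admin", "login", "", "backup\n"]
candidates = build_candidates("https://example.com/FUZZ", wordlist)
for url in candidates:
    print(url)

# Hypothetical response codes, standing in for real HTTP requests.
fake_statuses = dict(zip(candidates, [403, 200, 404]))
hits = filter_hits(fake_statuses)
print("Worth investigating:", hits)
```

Note that a 403 response is kept in this sketch: a forbidden directory still tells you the path exists.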

Remember: Always use website enumeration tools responsibly and ethically. Avoid scanning websites without proper authorization. For the examples in this issue, I used a site with an open bug bounty scheme to avoid this problem.

Disclaimer: The Agent Handler's OSINT Toolkit provides information for educational and informational purposes only. The information provided should not be considered legal or professional advice. The author and publisher of this toolkit are not responsible for any actions taken based on the information provided.